Anger has been growing around Apple’s AI-generated notifications on people’s iPhones, which have – multiple times – incorrectly summarised news stories.

For example, before he’d even played the final, Apple’s AI was claiming that the BBC was reporting that Luke Littler had won the PDC World Darts Championship:

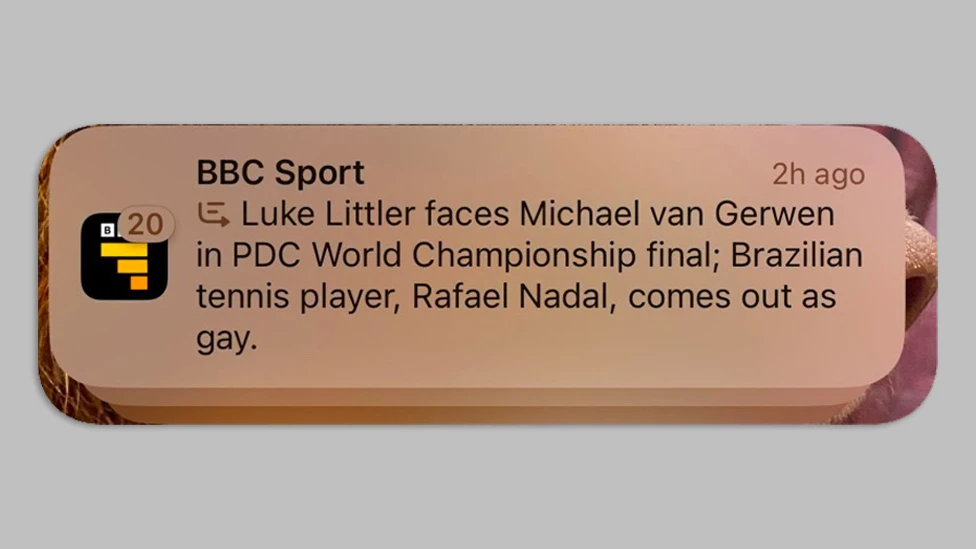

The same system was also happily reporting that Rafael Nadal had come out as gay:

… even though the story was really about “trailblazing Brazilian gay tennis player Joao Lucas Reis da Silva and the impact of his openness about his sexuality on the sport more widely.”

These issues are particularly worrying because the BBC – and other similar organisations – have no control over how Apple generates these summaries, and so are deeply concerned that trust in them as sources of reliable news is being eroded by a third party.

What’s extraordinary is that Apple appears to be doing little to sort out a problem that’s clearly pretty significant. The system isn’t working, is producing crap results… and yet it’s still being run.

Why? It appears to be part of the incessant hype-machine around AI content. It’s AI, so we can’t withdraw it! Even though these GenAI / LLM systems are notorious for producing crap.

All of this is impacting trust in… everything, and that has a very real impact in terms of social cohesion and engagement, and how much people will bother to think about whether they are reading things that are true, or even if that matters.

With so much division, misinformation and bullshit politics all around the world right now, this really does matter. Apple is a super-rich company, and it ought to be rushing to fix ‘bugs’ or remove features that are not working. If this were any feature other than AI, it feels like Apple would have jumped on it. But the deluding power of AI still seems to be strong…